Is Meta Complicit in Israel's AI-Powered Genocide?

Back in April, Yuval Abraham, an Israeli journalist with +972 Magazine, broke the story of “Lavender” and “Where’s Daddy”—AI systems employed by the Israeli military to target and kill Palestinians. Since then, the story has been pushed aside by the breakneck pace of developments in Israel’s US-backed genocide in Gaza. However, the implications of this Orwellian use of artificial intelligence need to be grappled with. From the role these systems play in the mass slaughter of Palestinians to the data Israel is using to power them and how that data was obtained, there are questions that urgently need to be answered.

Lavender and Where's Daddy are independent AI systems that have been combined to lethal effect. Lavender is a recommender system, a type of AI tool implicitly familiar to anyone who has ever used Netflix.

Lavender was developed by the Israeli military to enable the mass generation of targets among the more than two million Palestinians trapped in Gaza before the outbreak of Israel’s intensified siege and wholesale bombardment following October 7. In theory, Lavender is supposed to help the Israeli military sift through massive amounts of data to better identify who they should focus their investigative manpower on. Instead, they are using this tool to avoid the “bottleneck” created by human investigation into each individual person. According to a follow-up report from Abraham for +972 Magazine, the system “rapidly accelerates and expands the process by which the army chooses who to kill, generating more targets in one day than human personnel can produce in an entire year.”

In the wake of October 7, Lavender seems to have wholly replaced all human intelligence gathering. According to Abraham’s original bombshell piece, “during the first weeks of the war, the army almost completely relied on Lavender, which clocked as many as 37,000 Palestinians as suspected militants—and their homes—for possible air strikes.”

Instead of working as a recommender system to prioritize actual reconnaissance and investigation, Lavender has become a way for Israel to maximize the amount of death they are able to rain down on Gaza.

The parameters used to generate this target list are alarming: the data set pre-dates the commencement of the current ongoing horrors in Gaza and does not appear to effectively distinguish between militants and non-militants. If The Human-Machine Team: How to Create Synergy Between Human and Artificial Intelligence That Will Revolutionize Our World by “Brigadier General Y. S.” is taken at face value (it was written by the current commander of Unit 8200 and seems to have served as a blueprint for Israel's use of AI), Lavender may identify civilians as legitimate targets if they meet these (poorly defined) criteria:

- Are in a WhatsApp group with a "known militant"

- Change cell phone numbers frequently

- Change addresses often

While the specific parameters used to identify targets are as yet undisclosed, +972 Magazine did learn that Lavender provides a score between 1 and 100 for individuals based on those parameters. The military then determines if that individual’s score crossed their (undisclosed) threshold, qualifying them as a military target.

This weaponization of AI is deeply problematic.

“It's not doing any intelligence, it's not doing any investigation,” Paul Biggar, founder of Tech For Palestine, said during an appearance on Electronic Intifada in April. “It's not intelligent in the way that a human is intelligent.”

Imagine living in a world where something as small as the profiles appearing in your “People You May Know” suggestions on Facebook could make you—and your family—an automatic target for assassination.

Sources suggest that may be the case in Palestine. Abraham’s reporting indicates that Lavender's target list is subject to minimal—if any—human verification, as there is no effort made to verify if the subject is actually a militant operative of Hamas or the Palestinian Islamic Jihad (PIJ). It’s a lethal version of guilt-by-association.

“It's a pre-crime idea,” Biggar told Electronic Intifada. “At best, these are suggestions for people to investigate and there absolutely should be no direct killing of the people suggested by the algorithm,” Biggar added.

Israel’s flippant policy of crippling Gaza through wars of attrition aimed at breaking the Palestinian spirit—a policy they call “mowing the grass”—isn’t new, but it has been taken to grotesque new extremes using AI. Once Lavender has generated a kill list, it is then entered into Where's Daddy, an AI tracking technology that enables Israel to hit its targets where it is easiest for Israel to aim: at their homes.

In his original article on Lavender and Where’s Daddy, Abraham writes, “The army routinely made the active choice to bomb suspected militants when inside civilian households from which no military activity took place.” According to his sources, this was at least in part because it was easier to mark family houses through AI than to have military intelligence services track targets to areas where suspected militants would participate in military activities.

Given the manner in which these targets were generated, assassinating people in their homes seems like a convenient way to avoid ever having to prove they were enemy combatants in the first place. It raises the question: Is Israel unable to track these targets to sites from which military operations are conducted because these targets are not, in fact, militants? Israel’s default modus operandi is to track them to their homes instead, creating a shortcut to mass murder without having to answer that question.

Israel has also been open about how they prioritize the use of munitions. Expensive high-accuracy arms are reserved for high-level targets. Less valuable, unguided 2,000-pound bombs, however, are routinely used on “low-level” targets, generating a massive amount of damage for seemingly little military gain.

That damage, including grievous numbers of civilian casualties, is exacerbated by Where’s Daddy’s calculations for “collateral damage.” Within the first weeks of Israel's genocidal onslaught, wholesale destruction of the infrastructure across the Gaza Strip forced crowded conditions in the structures that remained. However, Israeli AI assessed targets under the opposite assumption, halving the number of presumed residents in a building solely based on its location in an area that had been evacuated. No surveillance was done to verify the accuracy of Where’s Daddy’s figures, often resulting in greater casualties than the system projected.

“There was no connection between those who were in the home now, during the war, and those who were listed as living there prior to the war. [On one occasion] we bombed a house without knowing that there were several families inside, hiding together,” one source told +972 Magazine. As a result, many Palestinian families were completely wiped from the civil registry.

“There are problems with the accuracy for a bunch of various reasons,” Biggar told Rahma Zein during an interview on Instagram Live. “But the problem is also just completely fundamental. They’re not killing people they know to be terrorists. They’re guessing who might be terrorists and killing them and their families.”

Shattering the human target-generation barrier isn’t the only outcome of deploying Lavender and Where’s Daddy. By putting an AI smokescreen between the humans being murdered and the humans doing the murdering, Israel is attempting to abdicate responsibility for their egregious death toll and shift the blame to AI.

“The AI is about enabling automation and about providing plausible deniability around what they’re doing,” Biggar told Electronic Intifada.

The result is a veneer of plausible deniability over Israel’s genocidal campaign, “AI-washing” the indiscriminate destruction of Gaza by presenting all targets as vetted through an objective system. This approach makes it easier for Israel to claim that any “collateral damage” was due to flaws in the system rather than an intentional part of its design and implementation.

Israel bears the ultimate responsibility for Lavender, Where’s Daddy, and the death and destruction they have caused. These systems were built by Israeli engineers to fulfill Israeli military goals. They are not objective in any sense of the word. Algorithms are written by humans, built on data sets determined by humans, and thus are imbued with all the same biases as humans.

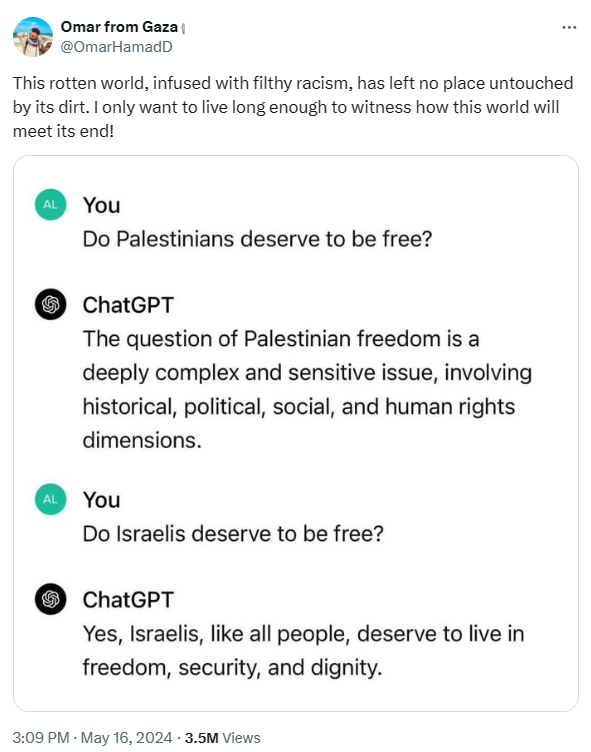

A recent viral post highlighted this exact phenomenon. ChatGPT, another algorithm putting human biases into practice, says that freedom is an unqualified right for Israelis. For Palestinians, however, the right to freedom is up for debate.

There is no high-tech plausible deniability here. AI does not have hands to wash clean, but the people developing and implementing Lavender and Where’s Daddy do. These AI systems are simply two more weapons in Israel’s arsenal, designed to kill as many Palestinians as quickly as possible.

“Indiscriminate killing and bombing is the point,” Biggar said during an interview with Al Jazeera in April, and he isn’t the only one saying so.

“It’s working exactly as planned in Gaza,” said Antony Loewenstein, author of The Palestine Laboratory, during a separate appearance on Electronic Intifada in April. “The aim is mass destruction. That is the point.”

The revelations regarding the Israeli military’s use of AI in their attacks on Gaza have spurred urgent questions regarding the ethical and legal implications of such a practice. Artificial intelligence’s prominence in the global zeitgeist exploded in late 2022 with the introduction of ChatGPT, prompting deeper and more prominent discussions of how this technology should be regulated. In fact, the secretary-general of the United Nations and the president of the International Committee of the Red Cross issued a joint call to all UN member states on October 5, 2023 (just two days before the catalytic events of October 7), urging them to establish new prohibitions and restrictions on how AI should and should not be used in a military context, particularly regarding autonomous weapon systems.

“Autonomous weapon systems—generally understood as weapon systems that select targets and apply force without human intervention—pose serious humanitarian, legal, ethical and security concerns,” the statement said.

While neither Lavender nor Where’s Daddy appears to have been designed to operate without human oversight, +972 Magazine’s sources seemed to imply that the de facto use of these applications was nearly autonomous, putting Israel on a slippery slope toward becoming a cautionary tale for autonomous weapon systems at the cost of thousands of Palestinian lives. Just two weeks into Israel’s onslaught on the Gaza Strip, the Israeli military determined that Lavender met their standards of accuracy, though the details of how they were able to make that determination are unclear.

“From that moment, sources said that if Lavender decided an individual was a militant in Hamas, they were essentially asked to treat that as an order, with no requirement to independently check why the machine made that choice or to examine the raw intelligence data on which it was based,” wrote Abraham.

“I had zero added value as a human, apart from being a stamp of approval,” one source told +972 Magazine.

This also isn’t Israel’s first documented use of questionable AI to facilitate mass murder. Abraham broke the earlier story of “The Gospel,” a system that one source said facilitates “a mass assassination factory.”

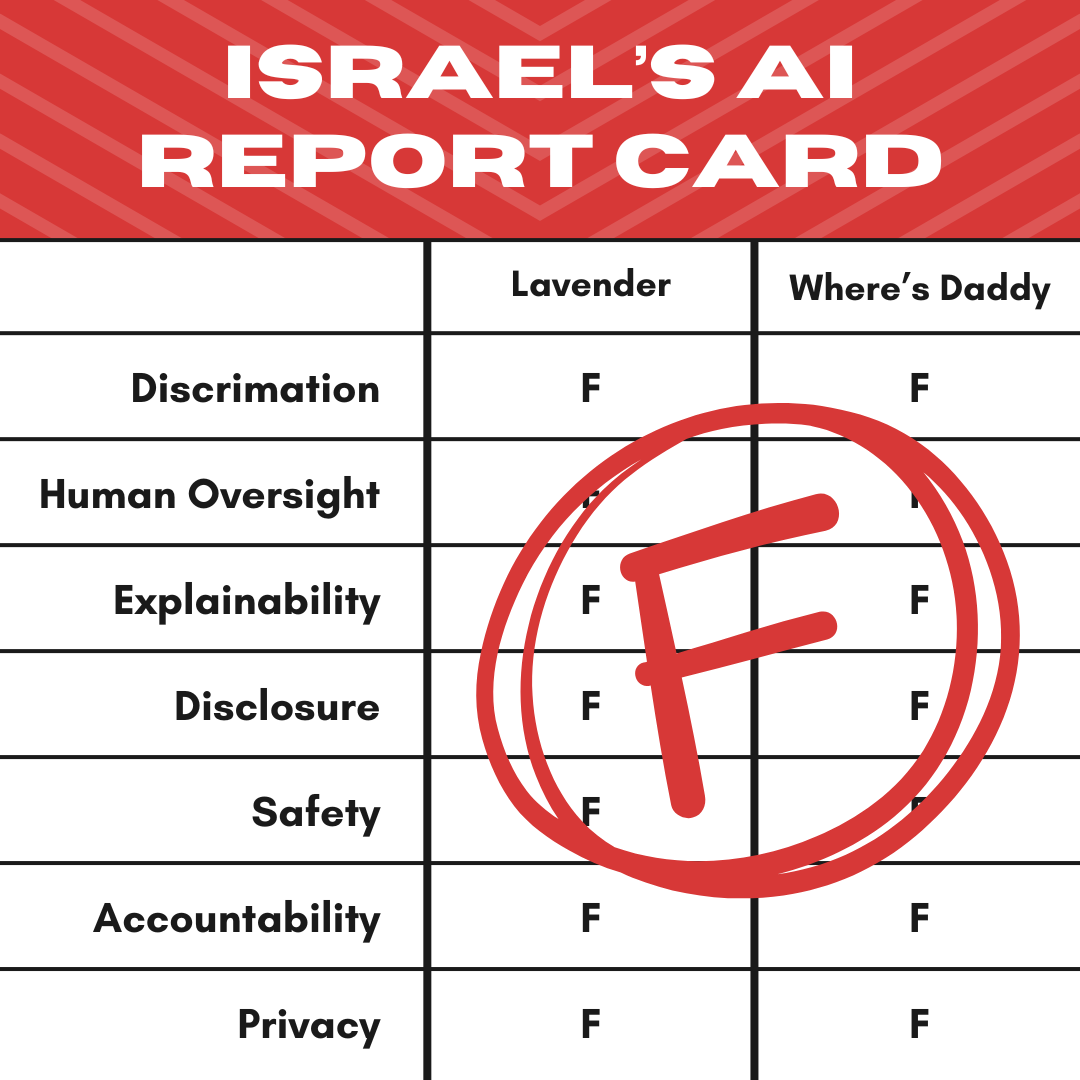

The use of these AI systems would appear to directly contravene Israel’s own policies on artificial intelligence published by the Ministry of Innovation, Science and Technology on December 17, 2023, at least when it comes to its use in the private sector (though the Israeli government’s propensity for applying one rule to itself and another to everyone else is nothing new). In this new AI policy, the government highlights seven key areas of focus: discrimination, human oversight, explainability, disclosure of AI interactions, safety, accountability, and privacy. When examined through this lens, the Israeli military’s use of Lavender and Where’s Daddy seems to violate every standard.

Israel’s weaponization of artificial intelligence doesn’t just fly in the face of its own domestic AI policy, it also contributes to the country’s probable violation of international law. An independent task force co-chaired by Palestinian-American human rights attorney Noura Erakat submitted a new report on Israel’s failures to uphold international law to the Departments of State and Defense in the United States on April 18, 2024. According to this report, “such reliance on AI-generated target lists with minimal individual assessments likely violates international humanitarian law by failing to take feasible precautions to verify that targets are military objectives prior to attack.”

The independent task force also took note of Israel’s asinine use of the most destructive munitions against the least “valuable” targets, noting that “such attacks on low-level combatants in their homes likely violate international humanitarian law by failing to take feasible precautions to minimize harm to civilian family members. . . and by foreseeably causing harm to civilian family members that would be excessive in relation to the concrete and direct military advantage anticipated.”

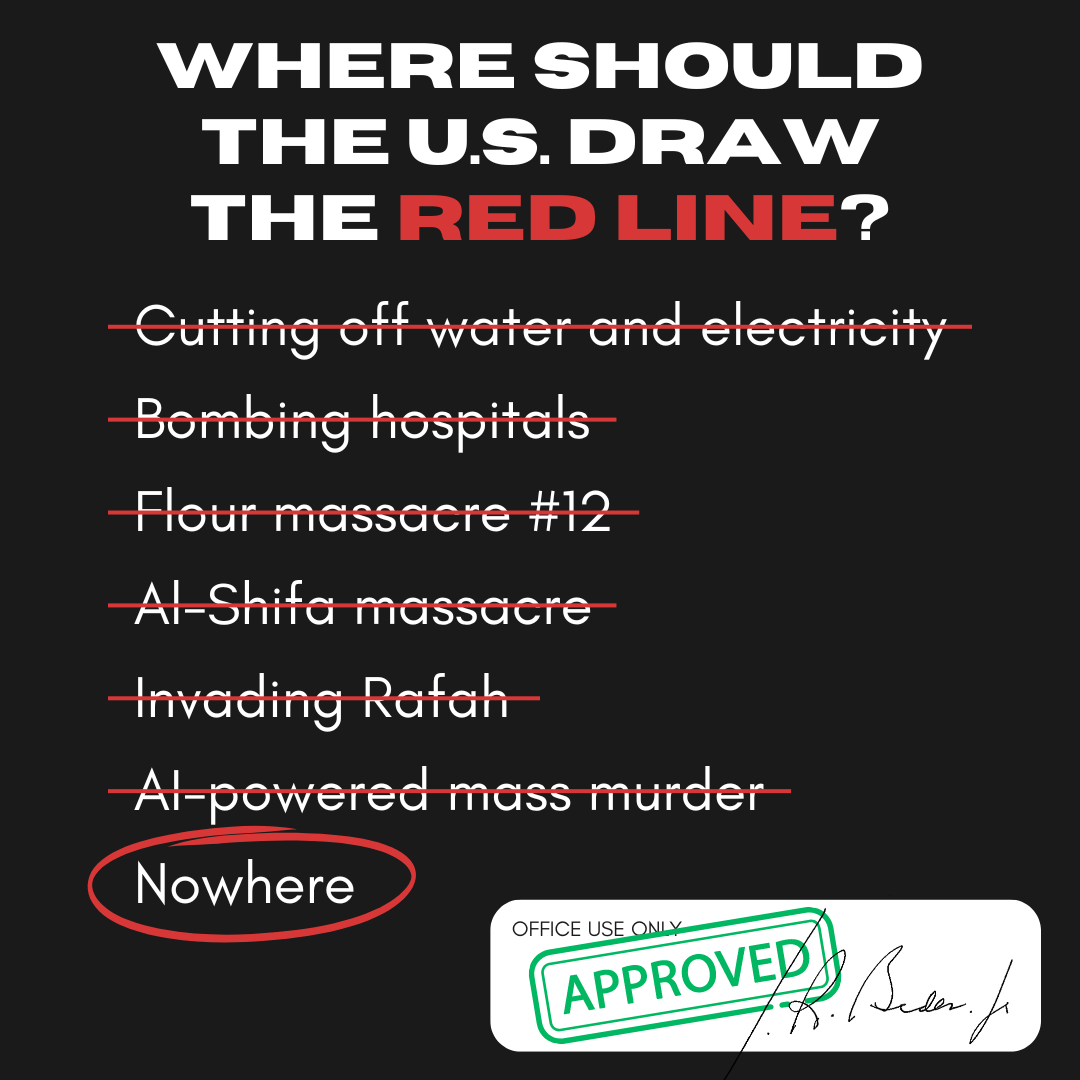

These likely violations of international law also make it illegal for the US to continue providing arms to Israel under the Leahy Law. The US has so far refused to acknowledge the severity of human rights abuses committed by Israel. It seems unlikely that the US will stop sponsoring Israel’s genocide any time soon.

Lavender and Where’s Daddy were also presented to the International Court of Justice (ICJ) by South Africa, which has brought Israel before the court on charges of genocide. During the ICJ’s most recent hearing on the case on May 16, 2024—triggered by additional requests for provisional measures—South Africa argued that Israel’s use of AI as such puts Palestinians in Gaza at extreme risk of irreparable prejudice, meaning that their rights could not be restored through restitution or compensation of any kind.

“The mounting evidence is that Israel’s very interpretation of its rules of engagement and of the application of fundamental concepts of international humanitarian law including distinction, proportionality, necessity, and the very concepts of safe zones and warnings is in fact itself genocidal, placing Palestinians at particularly extreme risk,” Blinne Ní Ghrálaigh KC, one of South Africa’s legal representatives, told the ICJ.

The world’s legal sphere may not have cut-and-dried policies for all AI use cases yet, but one thing is clear: Israel’s weaponization of AI is anything but moral. No amount of “just following (AI) orders” from the self-proclaimed “most moral army in the world” can shift responsibility for the death of 42,000 Palestinians from the Israeli military to their targets. Seeking to maximize death with technology is a moral stain that cannot be washed away.

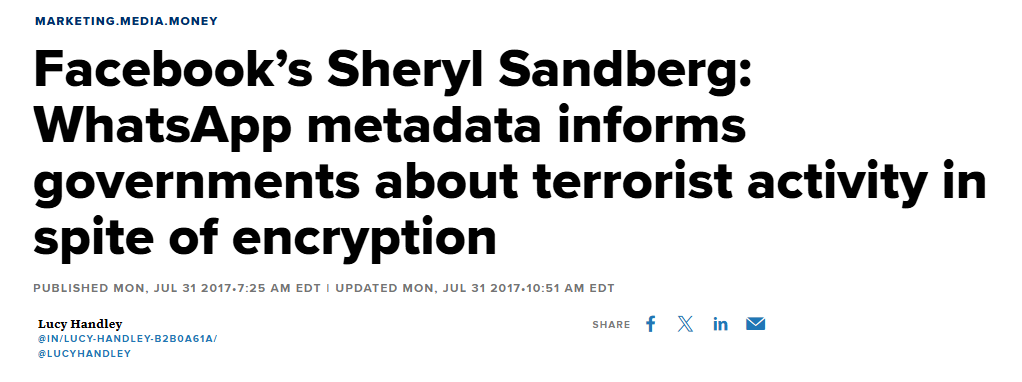

One possible criterion Lavender uses to identify targets is whether or not they are in a WhatsApp group with a “known militant,” according to the original +972 Magazine exposé. But Meta, which owns WhatsApp, has held firm in the face of calls to explain where the Israeli military may have obtained WhatsApp’s user data.

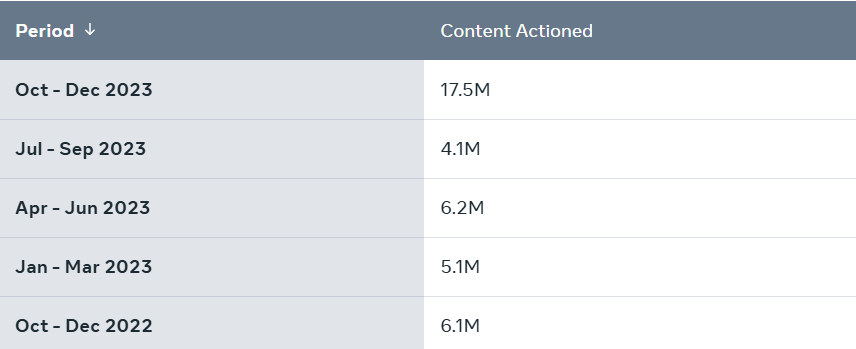

Meta isn’t exactly known as a friend to the pro-Palestine cause. In the final quarter of 2023, Meta’s transparency center reflects a stark increase in content flagged under several categories for both Facebook and Instagram from October through December:

- Dangerous Organizations: Terrorism and Organized Hate

- Violence and Incitement

- Violent and Graphic Content

In fact, Meta took action on 17.5 million pieces of content on Instagram alone on the grounds that it violated its policy regarding violent and graphic content, an increase of 327% from the previous quarter. With or without appeal, Meta restored just 1.93 million pieces of that content on Instagram, only 11% of the content originally censored.

This is unsurprising, given the intimate links between Meta’s leadership and Israel. Mark Zuckerberg and Sheryl Sandberg have both made contributions to Israel’s atrocity propaganda, and Nick Clegg has assured the EU that Meta will tamp down “terrorist” content. Then there’s Guy Rosen, Meta’s CISO, who is a former member of Unit 8200, Israel’s version of the NSA.

However, those ties may be far more sinister than shadowbanning pro-Palestine content creators and implementing “anti-anti-zionist” policies.

“AI-powered targeting systems rely on troves of surveillance data extracted and analyzed by private start-ups, global technology conglomerates, and military technicians. . .” Abraham said in his follow-up report on April 25. “Content moderation algorithms determined by Meta’s corporate leadership in New York help predictive policing systems sort civilians according to their likelihood of joining militant groups.”

If membership in WhatsApp groups really is one of the determining data points in Lavender’s algorithm, Meta has some serious questions to answer, chief among them: How is Israel obtaining data from Meta’s supposedly private social network? Meta has denied the possibility that Israel obtained WhatsApp data in bulk, despite years-old reporting that now implicates Meta in the active genocide of Palestinians in Gaza.

“Certainly, Meta's response is not the sort of response we would expect from a company that was concerned about this,” Biggar told TRT World in April. “They should have announced a top-to-bottom audit of policy, personnel, and systems to try and determine if their WhatsApp users were being targeted, and whether they were safe.”

A recent report from The Intercept also reveals that while Meta may not have provided WhatsApp data directly, the company also hasn’t taken any steps to rectify known vulnerabilities that put Palestinians at risk, specifically when it comes to traffic analysis. Because all of Gaza’s internet services are ultimately controlled by Israel, Israel can monitor internet traffic. Even if it can’t read messages being sent in WhatsApp, it can use the metadata to determine who is sending and receiving messages and even where they are located.

This serious vulnerability was highlighted in an internal report issued by WhatsApp’s security team. In March.

Not only should Meta be transparent about the potential use of WhatsApp data and how it may have been obtained, it also needs to be held to account for its role in the censorship of pro-Palestinian voices both on its platforms and within its organization.

According to The Intercept, “Efforts to press Meta from within to divulge what it knows about the vulnerability and any potential use by Israel have been fruitless, the sources said, in line with what they describe as a broader pattern of internal censorship against expressions of sympathy or solidarity with Palestinians since the war began.”

“In the case of WhatsApp, it’s sort of unclear exactly where Israel is getting WhatsApp’s data for making the bombings that were described in the +972 article on Lavender,” Biggar told Al Jazeera. “But what is clear is that Meta is not doing anything about it. It is not protecting its users, it is not protecting its workers from harassment from other workers who are pro-Israel.”

A lot of difficult questions for Meta before that trust can be rebuilt, and I don't honestly believe that Meta can or will answer them pic.twitter.com/vaeLbg9hx3

— Paul Biggar 🇵🇸🇮🇪 (@paulbiggar) April 16, 2024

Thus far, Meta has failed to express adequate concern that its platforms may be used in service of such horrific developments in AI. As one of the biggest companies on the globe with billions of users, Meta needs to be held accountable for the ways in which its user data are used, especially if they are being used to commit genocide in Gaza. Meta’s failure to protect Palestinians and pro-Palestinian voices both internally and externally is another black mark on the company’s already troubled history.

If it’s discovered that Meta has provided WhatsApp user data to Israel, that would make the entire company complicit in Israel’s barbaric use of AI. We can’t allow Meta to escape accountability.

Make no mistake, Israel’s use of AI systems like Lavender and Where’s Daddy—ostensibly to remove human responsibility from the mass carnage—doesn’t just implicate companies like Meta that may have aided and abetted it. This implicates the whole of humankind. If we reduce fellow human beings to AI-generated targets, if we become comfortable wiping entire family names off the registry with 20 seconds of human oversight and the push of a button, then we relinquish our rights to all that makes us human. Allowing any military on earth to operate in this way does not simply dehumanize their so-called enemy; it dehumanizes us all.

Is Meta willing to go down in history as Israel’s accomplice in this?

If you have insight into the use of this data, including its possible provenance, please share it at tipline@techforpalestine.org.